
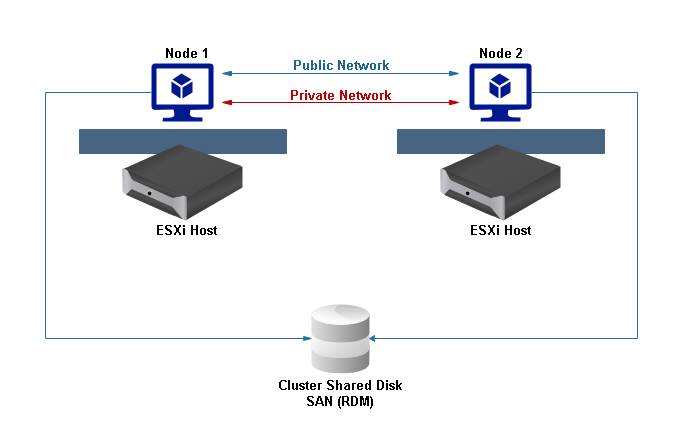

In some cases (typically in clustering scenarios) you may need to share the same disk (a VMDK or an RDM) between two or more virtual machines (VMs) on VMware ESXi. The best option is to use a VMDK file located on shared storage or locally on the ESXi host. If the VMs that share the drive run on different ESXi hosts, the disk must be on a shared VMFS datastore or on SAN/iSCSI storage. A shared disk is a VMDK file that two or more virtual machines can read and write at the same time.

Virtual disks that must be available to several VMware virtual machines at the same time use the Multi-writer feature (available in VMware ESXi 5.5 and later). This shared-disk mode is most commonly used in cluster solutions such as Oracle RAC and Microsoft Cluster Service (MSCS).

For Windows clusters, also keep Microsoft's own requirements in mind. According to the failover clustering requirements for Windows Server 2008, a clustered disk must be connected through Serial Attached SCSI (SAS), iSCSI, or Fibre Channel, and must be visible to all servers in the cluster. On VMware vSAN, native SCSI-3 Persistent Reservations (SCSI3-PR) support lets you create a new Windows Server Failover Cluster (WSFC), or move an existing one, with up to 6 nodes and 64 shared disks on vSAN-backed VMDKs. The older guide "VMWare ESX – Shared Windows Cluster Disks" (posted by wakamang on August 14, 2009) covers the disk creation process for virtual machines in a Windows 2003 cluster environment. In Windows Server 2019, the CSV cache is enabled by default to boost the performance of virtual machines running on Cluster Shared Volumes, and Windows Failover Cluster has improved logic for detecting cluster problems. A typical example of such a setup is a quorum disk shared by two VMs on two different ESXi hosts, connected to an iSCSI SAN in physical compatibility mode and used for SQL Server databases in a Windows Server 2012 failover cluster.

The main limitations of shared VMWare disks in Multi-Writer mode:

- You won't be able to migrate such VMs online (neither vMotion nor Storage vMotion). You can move them between ESXi hosts only while they are powered off.

- If you write data to such a VMDK from the guest operating system (for example, create a folder), the change is visible only on the VM that made it. In other words, Multi-writer VMDK technology is intended for clustering only; it can't be used as a shared disk with automatic file synchronization.

- You cannot expand such a VMDK disk online.

By default, VMware vSphere doesn't allow multiple virtual machines to open the same virtual machine disk (VMDK) in read-write mode. This protects the data on the virtual disk from corruption caused by multiple writers when the guests use a non-clustered file system.

The Multi-Writer option removes this restriction so that a cluster-aware application (Oracle RAC, Microsoft MSCS), which coordinates writes between the nodes itself, can write from two or more virtual machines without causing data loss.

Suppose the shared external storage (connected to each ESXi host using iSCSI or Fibre Channel SAN) has already been presented to all VMware ESXi hosts that run the two VMs (node1 and node2) to which you want to add a shared virtual drive. First, add a new SCSI controller on both virtual machines.

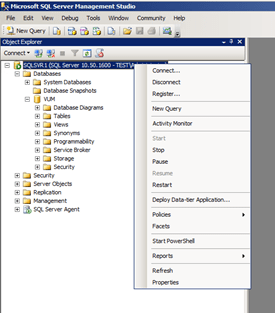

In the vSphere Client inventory, select the first virtual machine (Node 1), right-click it and select Edit Settings.

To add a new virtual device, select SCSI Controller in the drop-down list and click Add.

As the SCSI controller type, select LSI Logic SAS (be sure to create a new SCSI controller; don't use SCSI controller 0).

Now you need to choose the desired SCSI Bus sharing mode:

Virtual – if you want to share a virtual disk between VMs on the same ESXi host;

Physical – used when you need to share a vmdk file between VMs on different ESXi hosts.
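For reference, the controller settings above end up as entries in the VM's .vmx configuration file. The following Python sketch shows that mapping under stated assumptions: the key names (`scsiN.present`, `scsiN.virtualDev`, `scsiN.sharedBus`) and the value `lsisas1068` for LSI Logic SAS reflect typical .vmx files, and the helper functions are hypothetical, so compare the output with a .vmx generated by your own ESXi version.

```python
# Hypothetical helpers: build .vmx entries for the extra bus-sharing SCSI controller.
# Assumed keys: scsiN.present, scsiN.virtualDev ("lsisas1068" = LSI Logic SAS),
# and scsiN.sharedBus ("virtual" or "physical").


def bus_sharing_mode(same_esxi_host: bool) -> str:
    """Virtual: VMs on the same ESXi host; Physical: VMs on different hosts."""
    return "virtual" if same_esxi_host else "physical"


def controller_entries(bus: int, same_esxi_host: bool) -> dict[str, str]:
    """Return .vmx key/value pairs for a new LSI Logic SAS controller on `bus`."""
    if bus == 0:
        # SCSI controller 0 holds the system disk and must not be reused.
        raise ValueError("create a new controller, don't use SCSI 0")
    if not 1 <= bus <= 3:
        raise ValueError("a VM has at most four SCSI controllers (0-3)")
    prefix = f"scsi{bus}"
    return {
        f"{prefix}.present": "TRUE",
        f"{prefix}.virtualDev": "lsisas1068",
        f"{prefix}.sharedBus": bus_sharing_mode(same_esxi_host),
    }


def to_vmx(entries: dict[str, str]) -> str:
    """Render entries in the key = "value" form used by .vmx files."""
    return "".join(f'{key} = "{value}"\n' for key, value in entries.items())


if __name__ == "__main__":
    # node1 and node2 run on different ESXi hosts, so Physical bus sharing is used.
    print(to_vmx(controller_entries(1, same_esxi_host=False)), end="")
```

Run as a script, it prints the three entries for controller 1, ending with `scsi1.sharedBus = "physical"`. The same entries must be present on every node that shares the disk.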

Press OK. Next, you need to add a new virtual drive on the first VM (New Device > New Hard Disk > Add) with the following disk settings:

- VM Storage Policy: optional.

- Location: select the shared datastore that will store the vmdk file;

- Disk Provisioning: select Thick provision eager zeroed (other disk provisioning modes are not suitable). Note that a thick provision lazy zeroed disk can be converted to Thin.

- Sharing: Multi-writer.

- Virtual Device Node: select the SCSI controller you created earlier.

- Disk mode: Independent – Persistent (in this mode, you can't create snapshots of the virtual drive you want to share).
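The disk settings listed above can be treated as a checklist. This Python sketch validates a hypothetical `SharedDiskSpec` record against them; the field names and value strings (such as `eager_zeroed_thick`) are illustrative labels, not a VMware API.

```python
from dataclasses import dataclass


@dataclass
class SharedDiskSpec:
    """Hypothetical record of the New Hard Disk settings listed above."""
    provisioning: str    # e.g. "eager_zeroed_thick", "lazy_zeroed_thick", "thin"
    sharing: str         # "multi-writer" or "none"
    controller_bus: int  # bus number of the SCSI controller (0 = original controller)
    disk_mode: str       # e.g. "independent-persistent", "persistent"


def check_shared_disk(spec: SharedDiskSpec) -> list[str]:
    """Return the settings that differ from the list above (empty list = OK)."""
    problems = []
    if spec.provisioning != "eager_zeroed_thick":
        problems.append("Disk Provisioning must be Thick provision eager zeroed")
    if spec.sharing != "multi-writer":
        problems.append("Sharing must be Multi-writer")
    if spec.controller_bus == 0:
        problems.append("Virtual Device Node must be the new SCSI controller, not SCSI 0")
    if spec.disk_mode != "independent-persistent":
        problems.append("Disk mode must be Independent - Persistent")
    return problems


if __name__ == "__main__":
    # A thin-provisioned disk fails only the provisioning check.
    spec = SharedDiskSpec("thin", "multi-writer", 1, "independent-persistent")
    for problem in check_shared_disk(spec):
        print(problem)
```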

In the same way, add a new SCSI controller to the other virtual machine (Node 2). Then, in the settings of the second virtual machine, add a new disk (Existing Hard Disk).

In the dialog that appears, select the VMFS datastore that stores the shared disk you created earlier. In the submenu, select the folder of the first virtual machine, and in the middle pane select the vmdk disk file.

You also need to set the Sharing mode of this disk to Multi-writer.

If you want to use more than one shared disk on VMware, keep in mind that you don't need a separate SCSI controller for each of them. One LSI Logic SAS controller can serve up to 15 disks (unit numbers 0 to 15, with unit 7 reserved for the controller itself).

Make sure that you use the same SCSI device address for the shared vmdk disk on both virtual machines. So if the new controller was added as SCSI Controller 1 on the first VM, select SCSI Controller 1 on the second VM as well.

For example, if the new disk on the first VM (node1) has the address SCSI(1:0), meaning the first disk on the second SCSI controller, use the same address SCSI(1:0) for the shared disk on the second VM (node2).
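When several shared disks are involved, it helps to compute the addresses once and reuse the same list on every node. This hypothetical Python helper assumes LSI Logic SAS controllers on buses 1 to 3 (bus 0 holds the system disk) and treats unit 7 as reserved for the controller.

```python
# Hypothetical helper: identical SCSI(bus:unit) addresses for shared disks on every node.
RESERVED_UNIT = 7   # used by the SCSI controller itself
UNITS_PER_BUS = 16  # unit numbers 0-15 (15 usable, since 7 is reserved)


def shared_disk_addresses(count: int, first_bus: int = 1, last_bus: int = 3) -> list[str]:
    """Return `count` addresses like 'SCSI(1:0)', filling each bus before the next."""
    addresses: list[str] = []
    for bus in range(first_bus, last_bus + 1):
        for unit in range(UNITS_PER_BUS):
            if unit == RESERVED_UNIT:
                continue
            if len(addresses) == count:
                return addresses
            addresses.append(f"SCSI({bus}:{unit})")
    if len(addresses) < count:
        raise ValueError(f"{count} disks do not fit on buses {first_bus}-{last_bus}")
    return addresses


if __name__ == "__main__":
    # The same call on node1 and node2 yields the same address for each shared disk.
    print(shared_disk_addresses(3))
```

The 16th shared disk lands on SCSI(2:0), because bus 1 holds only 15 usable units.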

In ESXi versions prior to vSphere 6.0 Update 1, Multi-writer mode for vmdk is also supported, but you cannot enable it from the vSphere Client interface. You can add the multi-writer flag by shutting down the VM, manually editing its .vmx file and adding a line such as `scsi1:0.sharing = "multi-writer"` at the end of the file (where scsi1:0 is the bus:unit address of the shared disk).

You can also add this parameter in the VM properties: Options > General > Configuration Parameters, with the name `scsi1:0.sharing` and the value `multi-writer`.
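If you have many VMs to prepare, the manual .vmx edit can be scripted. The sketch below (a hypothetical `set_multiwriter` helper) adds or updates the `scsiX:Y.sharing = "multi-writer"` line in the text of a .vmx file. It assumes the VM is powered off and that you work on a copy of the file, for example one downloaded through the datastore browser and uploaded back afterwards.

```python
import re


def set_multiwriter(vmx_text: str, bus: int, unit: int) -> str:
    """Return vmx_text with scsi<bus>:<unit>.sharing set to "multi-writer".

    An existing sharing line for that disk is replaced (case-insensitively);
    otherwise a new line is appended at the end of the file.
    """
    key = f"scsi{bus}:{unit}.sharing"
    new_line = f'{key} = "multi-writer"'
    pattern = re.compile(rf"^\s*{re.escape(key)}\s*=.*$", re.IGNORECASE | re.MULTILINE)
    if pattern.search(vmx_text):
        return pattern.sub(new_line, vmx_text)
    if vmx_text and not vmx_text.endswith("\n"):
        vmx_text += "\n"  # make sure the new entry starts on its own line
    return vmx_text + new_line + "\n"
```

For a real file, read it with `pathlib.Path.read_text()`, pass the text through `set_multiwriter(text, 1, 0)` and write the result back. Running it twice leaves the file unchanged.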


After changing the virtual machine settings, connect to the console of the guest OS. In this case the VMs run Windows Server, so you can also connect to them remotely using RDP (or the RDCMan manager). Start the Computer Management console and expand Storage > Disk Management. Right-click Disk Management and select Rescan Disks.

The system detects the new disk and offers to initialize it. Select the desired partition table (MBR or GPT), create a new partition and format it. Then rescan the disks on the second VM as well (the disk is already initialized and formatted, so don't format it again). After this, both VMs use the shared disk, and you can proceed to set up a cluster solution based on Oracle RAC or Microsoft Cluster Services (MSCS).

When you try to migrate a VM with a connected vmdk disk in Multi-writer mode, the following error appears: "virtual machine is configured to use a device that prevents the operation: Device SCSI controller X is a SCSI controller engaged in bus-sharing". To resolve this issue, turn off the VM and perform a cold migration.
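Before attempting a migration, you can check a VM's .vmx file for the settings behind this error. This Python sketch (a hypothetical `migration_blockers` helper) flags controllers with a `sharedBus` value other than `none` and disks with `sharing = "multi-writer"`. Treating both as blockers follows the limitations listed earlier; the key names are assumptions about the .vmx format.

```python
import re

# Matches e.g.  scsi1.sharedBus = "physical"   or   scsi1:0.sharing = "multi-writer"
_LINE = re.compile(r'^\s*(scsi\d+(?::\d+)?)\.(sharedBus|sharing)\s*=\s*"([^"]*)"', re.IGNORECASE)


def migration_blockers(vmx_text: str) -> list[str]:
    """List devices that rule out a hot migration (vMotion / Storage vMotion)."""
    blockers = []
    for line in vmx_text.splitlines():
        match = _LINE.match(line)
        if not match:
            continue
        device, key, value = match.group(1), match.group(2).lower(), match.group(3).lower()
        if key == "sharedbus" and value not in ("", "none"):
            blockers.append(f"{device}: bus sharing = {value}")
        elif key == "sharing" and value == "multi-writer":
            blockers.append(f"{device}: multi-writer disk")
    return blockers
```

An empty result means none of these settings are present. It does not guarantee that vMotion will succeed, since other devices can also block it.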

Share VMDK between VMs (Multi-Writer) without Clustered File System

The procedure for sharing a VMDK between two VMware VMs in Multi-Writer mode is the same as above (in addition, be sure to use the VMware Paravirtual SCSI controller type when adding the controller to both VMs). However, since this is not a recommended configuration, we will see some strange behaviour.

Before looking at the main difference when sharing a VMDK between two VMs with a non-clustered file system, note that on the second VM (and any additional VMs) the VMDK is added with the Existing Hard Disk option, and its size is greyed out because you can't change it. Also note that the added VMDK is not automatically recognized as a multi-writer VMDK, so the same settings, including sharing and disk mode, must be configured on the second VM as well.

Once the VMDK is added, open the Disk Management MMC console on the first VM, then bring the disk Online, initialize it, and format it.

The 'strange' behaviour is this: suppose that on the source VM we create a folder named SharedVMFolder on the new volume. Then, after bringing the disk Online on the second VM, we create a second folder, Test, on the source VM to demonstrate the behaviour.

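Because the disk was brought Online on the second VM before the Test folder was created, the Test folder will not be visible on the second VM. A non-cluster file system such as NTFS assumes it has exclusive access to the volume, so the second VM keeps working from the metadata it read when the disk came Online and does not notice changes made by the source VM.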

To make the Test folder visible on the second VM as well, we have to take the disk Offline and bring it back Online on the second VM.
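The behaviour can be illustrated with a toy Python model: each VM keeps its own snapshot of the folder list, taken when the disk is brought Online, much as a non-cluster file system caches metadata. The classes are hypothetical and greatly simplified; in reality two guests writing to the same NTFS volume can corrupt it, which is exactly what the default vSphere locking prevents.

```python
from typing import Optional, Set


class SharedVolume:
    """The shared VMDK: the folders that actually exist on disk."""

    def __init__(self) -> None:
        self.folders: Set[str] = set()


class GuestView:
    """One VM's view of the volume (toy model of a non-cluster file system).

    The folder list is read once when the disk is brought Online and then
    served from a private cache, so writes made by other VMs go unnoticed.
    """

    def __init__(self, volume: SharedVolume) -> None:
        self.volume = volume
        self.cache: Optional[Set[str]] = None  # None while the disk is Offline

    def online(self) -> None:
        self.cache = set(self.volume.folders)  # snapshot taken at mount time

    def offline(self) -> None:
        self.cache = None

    def create_folder(self, name: str) -> None:
        if self.cache is None:
            raise RuntimeError("disk is Offline")
        self.volume.folders.add(name)  # the write reaches the shared disk
        self.cache.add(name)           # and this VM's own cache

    def list_folders(self) -> Set[str]:
        if self.cache is None:
            raise RuntimeError("disk is Offline")
        return set(self.cache)


if __name__ == "__main__":
    volume = SharedVolume()
    source, second = GuestView(volume), GuestView(volume)
    source.online()
    source.create_folder("SharedVMFolder")
    second.online()                       # second VM brings the disk Online
    source.create_folder("Test")          # created after the second VM's snapshot
    print(sorted(second.list_folders()))  # ['SharedVMFolder']
    second.offline()
    second.online()                       # Offline/Online refreshes the view
    print(sorted(second.list_folders()))  # ['SharedVMFolder', 'Test']
```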

Of course, this is not a supported use of this functionality. Still, it is a neat hack with the multi-writer disk and can be useful for 'copying' a lot of data between VMs when no clustered physical storage is available.

We could even use this approach (although it is not recommended) in DEV or TEST environments on a VMware ESX infrastructure, by creating a simple scheduled task on the second VM that performs an Offline/Online action using PowerShell or diskpart (called from PowerShell).


For example, we could use a scheduled task on the second VM running one of the following scripts:
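A minimal sketch of the diskpart variant, written in Python and assuming Python is installed on the second VM: it writes a diskpart script that selects the disk, takes it Offline and brings it back Online, then runs it with `diskpart /s`. The disk number is a hypothetical placeholder (check it first, for example with Get-Disk), and the script needs administrative rights on the Windows guest.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def diskpart_refresh_script(disk_number: int) -> str:
    """diskpart commands that take the given disk Offline and bring it back Online."""
    return f"select disk {disk_number}\noffline disk\nonline disk\n"


def refresh_disk(disk_number: int) -> None:
    """Run the script with `diskpart /s` (Windows only, elevated session required)."""
    if sys.platform != "win32":
        raise OSError("diskpart is only available on Windows")
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "refresh.txt"
        script.write_text(diskpart_refresh_script(disk_number), encoding="ascii")
        subprocess.run(["diskpart", "/s", str(script)], check=True)
```

A scheduled task could then run a small script that calls `refresh_disk(1)`, for example `schtasks /Create /SC MINUTE /MO 15 /TN "RefreshSharedDisk" /TR "py C:\scripts\refresh_disk.py" /RU SYSTEM`, where the task name, interval and path are hypothetical.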


As an alternative to the diskpart/PowerShell approach above, we could use the following PowerShell-only approach to set all Offline disks to Online:
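A PowerShell-only version can be as short as a `Get-Disk | Set-Disk` pipeline. The sketch below keeps such a one-liner (our assumption of what the script looks like, using the Storage module cmdlets) as a string and runs it through powershell.exe from Python; you can equally paste the one-liner into an elevated PowerShell session.

```python
import subprocess
import sys

# Assumed one-liner (Storage module): select every Offline disk and set it Online.
ONLINE_ALL_OFFLINE = "Get-Disk | Where-Object IsOffline -Eq $true | Set-Disk -IsOffline $false"


def powershell_args(command: str) -> list[str]:
    """Argument list for running one PowerShell command non-interactively."""
    return ["powershell.exe", "-NoProfile", "-NonInteractive", "-Command", command]


def bring_offline_disks_online() -> None:
    """Run the one-liner; raises CalledProcessError if powershell.exe exits non-zero."""
    if sys.platform != "win32":
        raise OSError("Get-Disk and Set-Disk are only available on Windows")
    subprocess.run(powershell_args(ONLINE_ALL_OFFLINE), check=True)
```

Note that this brings every Offline disk Online, not only the shared one.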


To check which disks on a system are Offline, we can use the following PowerShell commands/cmdlets:
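One way to do this is to filter `Get-Disk` output on the `IsOffline` property. This Python sketch (hypothetical helper names) runs that query with `ConvertTo-Json` and parses the result. Note that ConvertTo-Json emits a single object rather than an array when only one disk matches, and nothing at all when none match, so the parser normalizes all three cases to a list.

```python
import json
import subprocess
import sys

LIST_OFFLINE = ("Get-Disk | Where-Object IsOffline -Eq $true | "
                "Select-Object Number, FriendlyName, Size, IsOffline | ConvertTo-Json")


def parse_disks(json_text: str) -> list[dict]:
    """Normalize ConvertTo-Json output (nothing, one object, or an array) to a list."""
    if not json_text.strip():
        return []
    data = json.loads(json_text)
    return data if isinstance(data, list) else [data]


def offline_disks() -> list[dict]:
    """Return the Offline disks of the local Windows guest."""
    if sys.platform != "win32":
        raise OSError("Get-Disk is only available on Windows")
    result = subprocess.run(
        ["powershell.exe", "-NoProfile", "-NonInteractive", "-Command", LIST_OFFLINE],
        capture_output=True, text=True, check=True)
    return parse_disks(result.stdout)
```

The `Number` field of each entry is the value to pass to `select disk` in diskpart.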